App responsiveness drives user satisfaction. A common bottleneck in achieving a quick response time is the backend application behind the client app. Network requests and their processing by the backend are inherently slow.

Solution

Developers constantly look for ways to improve the performance of the server code that executes database queries. Indexes, query hints, and more powerful database servers can help a lot.

The best technique is to avoid “talking” to the database whenever possible!

The Data Aquarium Framework at the foundation of applications created with Code On Time implements controller-level caching. Developers identify the information that does not change frequently and specify the caching rules in the ~/app/touch-settings.json configuration file.

Consider the following changes in the configuration of the backend application introduced in the RESTful Workshop. The server.cache.rules key defines the caching rules that make the framework reuse previously constructed responses to the requests fetching the Categories and Suppliers data.

    {
      "server": {
        "cache": {
          "rules": {
            "suppliers|categories": {
              "duration": 1
            }
          }
        }
      }
    }

The responses to the requests fetching this data are placed in the server cache and stay there for 1 minute. For each request, the framework first looks up a previous response in the cache. It performs the database queries only if the response is not found. The cache manager removes stale responses automatically.
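The lookup flow described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the framework's implementation: the `ResponseCache` class, the `fetch` callback, and the injectable `clock` are hypothetical names. As a simplification, the sketch discards a stale entry when it is next requested, whereas the framework's cache manager removes stale responses on its own.

```python
import time
from typing import Any, Callable, Dict, Tuple


class ResponseCache:
    """Hypothetical in-memory response cache with a fixed lifetime per entry."""

    def __init__(self, duration_minutes: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl_seconds = duration_minutes * 60
        self._clock = clock  # injectable so that expiry can be tested
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def get_or_fetch(self, key: str, fetch: Callable[[], Any]) -> Any:
        """Return the cached response for key, calling fetch() only on a miss."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None:
            expires_at, response = entry
            if now < expires_at:
                return response      # cache hit: the database is not queried
            del self._entries[key]   # stale entry: discard and fall through
        response = fetch()           # cache miss: run the database query
        self._entries[key] = (now + self._ttl_seconds, response)
        return response
```

With `ResponseCache(1)`, two calls to `get_or_fetch("suppliers", query)` made within the same minute invoke the hypothetical `query` function only once.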

The names of the caching rules are case-insensitive regular expressions evaluated against the data controller names. In this instance, the single rule suppliers|categories covers both controllers. The duration property specifies the number of minutes the data remains in the “cached” state. Set the duration to 1440 to keep the responses in the cache for 24 hours.
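The rule matching can be illustrated with Python's `re` module. The function name `cache_duration_minutes` is hypothetical, and the sketch assumes that a rule name must match the entire controller name; the text does not say whether the framework anchors the expression, so treat that choice as an assumption.

```python
import re
from typing import Dict, Optional

# Mirrors the server.cache.rules section of touch-settings.json shown above.
RULES: Dict[str, Dict[str, int]] = {
    "suppliers|categories": {"duration": 1},
}


def cache_duration_minutes(controller: str,
                           rules: Dict[str, Dict[str, int]] = RULES) -> Optional[int]:
    """Return the duration of the first matching rule, or None if no rule applies."""
    for pattern, rule in rules.items():
        # Assumption: the whole controller name must match the rule name.
        if re.fullmatch(pattern, controller, flags=re.IGNORECASE):
            return rule["duration"]
    return None
```

Here `cache_duration_minutes("Suppliers")` and `cache_duration_minutes("CATEGORIES")` both return 1, while `cache_duration_minutes("Products")` returns `None`, meaning the response is not cached.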

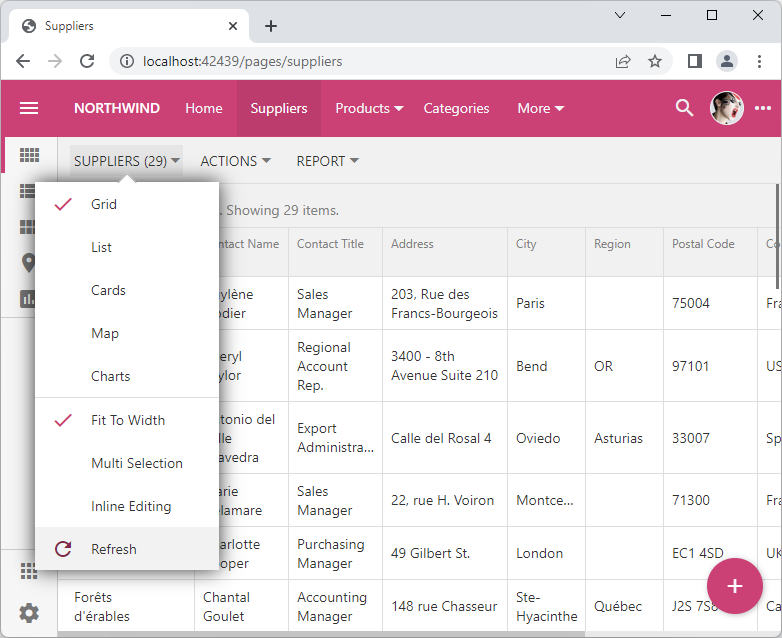

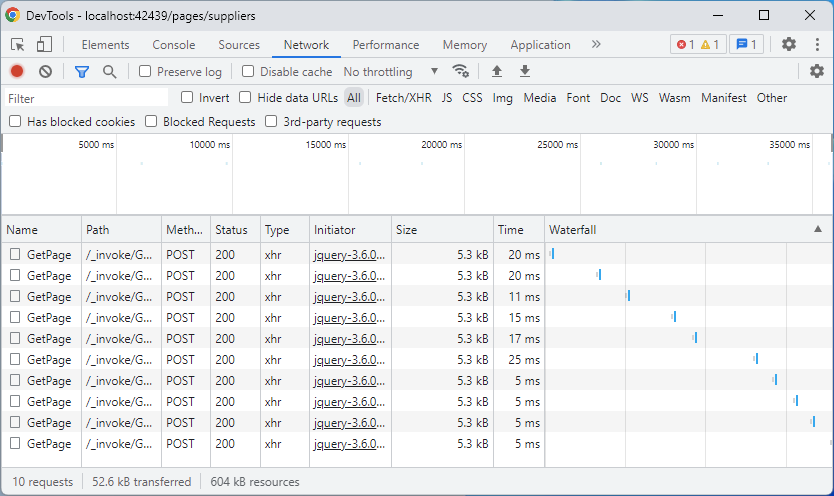

Let’s evaluate the impact of the universal caching by repeatedly invoking the Refresh command in the list of suppliers of the backend application.

The network monitor shows the first five Refresh requests taking between 11 and 20 milliseconds. Request #6 was performed when the caching rules were entered in the touch-settings.json configuration file. The remaining requests show a consistent response time of 5 milliseconds. Caching provides a 70% improvement in the average response time in this particular application.
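The arithmetic behind that figure can be checked with a short calculation. The individual timings of the first five requests are not listed in the text, so this sketch uses only the stated range (11 to 20 ms) and the cached time (5 ms); the function name is hypothetical.

```python
def improvement_percent(uncached_ms: float, cached_ms: float) -> float:
    """Relative reduction in response time, in percent."""
    return (uncached_ms - cached_ms) / uncached_ms * 100


CACHED_MS = 5  # consistent cached response time reported above

# Bounds of the improvement for the fastest and slowest uncached requests.
low = improvement_percent(11, CACHED_MS)
high = improvement_percent(20, CACHED_MS)

# Uncached average that would correspond to exactly a 70% improvement.
implied_uncached_avg = CACHED_MS / (1 - 0.70)

print(f"{low:.1f}% to {high:.1f}%")        # 54.5% to 75.0%
print(f"{implied_uncached_avg:.1f} ms")    # 16.7 ms
```

A 70% improvement therefore corresponds to an uncached average of roughly 16.7 ms, which falls inside the observed range.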

Numerous conditions impact the performance of database queries. For example, a response will take longer to produce if the database is on a remote server, the server is under heavy load, or the query is complex.

The cached responses will always have a consistent response time.

The categories and suppliers of the products change infrequently and are perfect candidates for caching. The product inventory changes frequently and should not be cached.

Do not use caching with data that cannot be reused. For example, the list of orders of a customer is unique to that customer. Caching it is not likely to improve the overall performance.

The cached response must not depend on the user identity or include user-specific data, since the same response is served to every user.

Exemptions

Caching of the lookup data improves performance but introduces a unique challenge. How does one go about making changes to the cached data items? For example, backend administrators will have a hard time entering new suppliers or editing the existing ones. The frontend will keep presenting the “unchanged” information. With the caching configuration presented above, it may take up to a minute for the application to start displaying the new data.

Caching rules can be enhanced with the exemptRoles and exemptScopes keys, which specify the user roles or OAuth 2.0 scopes that are not affected by caching.

    {
      "server": {
        "cache": {
          "rules": {
            "suppliers|categories": {
              "duration": 1,
              "exemptRoles": "Administrators",
              "exemptScopes": "inventory.write"
            }
          }
        }
      }
    }

Multiple roles are separated with commas, while multiple scopes are separated with spaces.

The cache lookup is not performed if the user identity matches either exemptRoles or exemptScopes.
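The exemption check can be sketched as follows. The `is_exempt` function and its parameters are hypothetical, and the sketch assumes exact, case-sensitive comparison of role and scope names, which the text does not specify.

```python
from typing import Any, Dict, Iterable


def is_exempt(rule: Dict[str, Any],
              user_roles: Iterable[str],
              token_scopes: Iterable[str]) -> bool:
    """Return True if the request must bypass the cache for this rule."""
    # Multiple roles are separated with commas.
    exempt_roles = {
        role.strip()
        for role in rule.get("exemptRoles", "").split(",")
        if role.strip()
    }
    # Multiple scopes are separated with spaces.
    exempt_scopes = set(rule.get("exemptScopes", "").split())
    # Assumption: names are compared exactly; matching either list is enough.
    return bool(exempt_roles & set(user_roles)) or bool(exempt_scopes & set(token_scopes))
```

With the rule shown above, `is_exempt(rule, ["Administrators"], [])` and `is_exempt(rule, ["Users"], ["inventory.write"])` are both `True`, so those requests go straight to the database. A user in the hypothetical `Users` role with only an `inventory.read` scope is served from the cache.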

Administrators will not feel the improved performance associated with caching, since their own requests to fetch suppliers and categories are exempt from the “caching” treatment. On the other hand, they will likely be the only users reading and writing the data that is retrieved from the cache for everybody else. Making edits to the data will have a familiar flow.